A.I. detects COVID-19 on chest X-rays with accuracy and speed

Algorithm performed similarly to a consensus of thoracic radiologists

- Algorithm was trained and tested on the largest published COVID-era clinical dataset of chest X-rays (17,002 images)

- Algorithm analyzed X-ray images of lungs about 10 times faster and 1-6 percentage points more accurately than individual specialized radiologists

- Algorithm is now publicly available for other researchers to continue to train it with new data

- System could also potentially flag patients for isolation and testing who are not otherwise under investigation for COVID-19

- ‘It would take seconds to screen a patient and determine if they need to be isolated,’ researcher says

EVANSTON, Ill. — Northwestern University researchers have developed a new artificial intelligence (A.I.) platform that detects COVID-19 by analyzing X-ray images of the lungs.

Called DeepCOVID-XR, the machine-learning algorithm outperformed each of five specialized thoracic radiologists, spotting COVID-19 in X-rays about 10 times faster and 1-6 percentage points more accurately.

The researchers believe physicians could use the A.I. system to rapidly screen patients who are admitted to hospitals for reasons other than COVID-19. Faster, earlier detection of the highly contagious virus could help protect health care workers and other patients by prompting patients who screen positive to isolate sooner.

The study’s authors also believe the algorithm could potentially flag patients for isolation and testing who are not otherwise under investigation for COVID-19.

The study was published today (Nov. 24) in the journal Radiology.

“We are not aiming to replace actual testing,” said Northwestern’s Aggelos Katsaggelos, an A.I. expert and senior author of the study. “X-rays are routine, safe and inexpensive. It would take seconds for our system to screen a patient and determine if that patient needs to be isolated.”

“It could take hours or days to receive results from a COVID-19 test,” said Dr. Ramsey Wehbe, a cardiologist and postdoctoral fellow in A.I. at the Northwestern Medicine Bluhm Cardiovascular Institute. “A.I. doesn’t confirm whether or not someone has the virus. But if we can flag a patient with this algorithm, we could speed up triage before the test results come back.”

Katsaggelos is the Joseph Cummings Professor of Electrical and Computer Engineering in Northwestern’s McCormick School of Engineering. He also has courtesy appointments in computer science and radiology. Wehbe is a postdoctoral fellow at Bluhm Cardiovascular Institute at Northwestern Memorial Hospital.

A trained eye

For many patients with COVID-19, chest X-rays display similar patterns. Instead of clear, healthy lungs, their lungs appear patchy and hazy.

“Many patients with COVID-19 have characteristic findings on their chest images,” Wehbe said. “These include ‘bilateral consolidations.’ The lungs are filled with fluid and inflamed, particularly along the lower lobes and periphery.”

The problem is that pneumonia, heart failure and other conditions affecting the lungs can look similar on X-rays. It takes a trained eye to tell the difference between COVID-19 and something less contagious.

Katsaggelos’ laboratory specializes in using A.I. for medical imaging. He and Wehbe had already been working together on cardiology imaging projects and wondered if they could develop a new system to help fight the pandemic.

“When the pandemic started to ramp up in Chicago, we asked each other if there was anything we could do,” Wehbe said. “We were working on medical imaging projects using cardiac echo and nuclear imaging. We felt like we could pivot and apply our joint expertise to help in the fight against COVID-19.”

A.I. vs. human

To develop, train and test the new algorithm, the researchers used 17,002 chest X-ray images — the largest published clinical dataset of chest X-rays from the COVID-19 era used to train an A.I. system. Of those images, 5,445 came from COVID-19-positive patients from sites across the Northwestern Memorial Healthcare System.

The team then tested DeepCOVID-XR against five experienced, cardiothoracic fellowship-trained radiologists on 300 randomly selected test images from Lake Forest Hospital. Each radiologist took approximately two-and-a-half to three-and-a-half hours to examine this set of images, whereas the A.I. system took about 18 minutes.
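
The "about 10 times faster" figure can be checked against these timings. The short Python sketch below is a back-of-the-envelope calculation, not part of the study's method. It converts the radiologists' reading times (2.5 to 3.5 hours for the 300-image set) and the A.I. system's roughly 18 minutes into a speed-up range and an average time per image. It assumes the reported times are approximate, and the helper names (`speedup`, `seconds_per_image`) are illustrative only.

```python
# Approximate timings reported for the 300-image test set.
N_IMAGES = 300
AI_MINUTES = 18
RADIOLOGIST_MINUTES = (2.5 * 60, 3.5 * 60)  # 150 to 210 minutes per radiologist

def speedup(human_minutes: float, ai_minutes: float) -> float:
    """How many times faster the A.I. finished the same image set (illustrative helper)."""
    return human_minutes / ai_minutes

def seconds_per_image(minutes: float, n_images: int) -> float:
    """Average reading time per image, in seconds (illustrative helper)."""
    return minutes * 60 / n_images

# Speed-up against the fastest and slowest radiologist times.
low, high = (speedup(m, AI_MINUTES) for m in RADIOLOGIST_MINUTES)
print(f"speed-up: {low:.1f}x to {high:.1f}x")
print(f"A.I.: {seconds_per_image(AI_MINUTES, N_IMAGES):.1f} s per image")
for m in RADIOLOGIST_MINUTES:
    print(f"radiologist: {seconds_per_image(m, N_IMAGES):.0f} s per image")
```

Running it gives a speed-up of roughly 8.3x to 11.7x, with the A.I. spending about 3.6 seconds per image against 30 to 42 seconds per image for a radiologist. That is consistent with both "about 10 times faster" and the claim that screening a patient would take seconds.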

The radiologists' accuracy ranged from 76% to 81%. DeepCOVID-XR performed slightly better, at 82% accuracy.
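
The A.I.'s 1-6 point accuracy advantage is a difference in percentage points: 82% minus the radiologists' 76% to 81%. The Python sketch below makes that arithmetic explicit. It also translates the accuracies into counts of correctly classified images, assuming each reported percentage is an exact share of the 300 test images. The article gives rounded percentages, so the counts are approximate, and the helper names are illustrative only.

```python
N_IMAGES = 300
AI_ACCURACY = 0.82
RADIOLOGIST_RANGE = (0.76, 0.81)  # lowest and highest individual radiologist accuracy

def gap_points(ai: float, human: float) -> float:
    """Accuracy difference in percentage points, rounded to one decimal (illustrative helper)."""
    return round((ai - human) * 100, 1)

def correct_images(accuracy: float, n_images: int) -> int:
    """Approximate number of correctly classified images (illustrative helper)."""
    return round(accuracy * n_images)

for human in RADIOLOGIST_RANGE:
    extra = correct_images(AI_ACCURACY, N_IMAGES) - correct_images(human, N_IMAGES)
    print(f"vs radiologist at {human:.0%}: A.I. ahead by "
          f"{gap_points(AI_ACCURACY, human)} points, {extra} more images correct")
```

On a 300-image set, a 1 to 6 point gap corresponds to roughly 3 to 18 additional correct reads (about 246 correct for the A.I. versus 228 to 243 for the radiologists).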

“These are experts who are sub-specialty trained in reading chest imaging,” Wehbe said. “Whereas the majority of chest X-rays are read by general radiologists or initially interpreted by non-radiologists, such as the treating clinician. A lot of times decisions are made based off that initial interpretation.”

“Radiologists are expensive and not always available,” Katsaggelos said. “X-rays are inexpensive and already a common element of routine care. This could potentially save money and time — especially because timing is so critical when working with COVID-19.”

Limits to diagnosis

Of course, some COVID-19 patients show no sign of illness, including on their chest X-rays. Especially early in the course of infection, patients are unlikely to show visible changes in their lungs yet.

“In those cases, the A.I. system will not flag the patient as positive,” Wehbe said. “But neither would a radiologist. Clearly there is a limit to radiologic diagnosis of COVID-19, which is why we wouldn’t use this to replace testing.”

The Northwestern researchers have made the algorithm publicly available with hopes that others can continue to train it with new data. Right now, DeepCOVID-XR is still in the research phase, but could potentially be used in the clinical setting in the future.

Study coauthors include Jiayue Sheng, Shinjan Dutta, Siyuan Chai, Amil Dravid, Semih Barutcu and Yunan Wu — all members of Katsaggelos’ lab — and Drs. Donald Cantrell, Nicholas Xiao, Bradly Allen, Gregory MacNealy, Hatice Savas, Rishi Agrawal and Nishant Parekh — all radiologists at Northwestern Medicine.

Multimedia Downloads

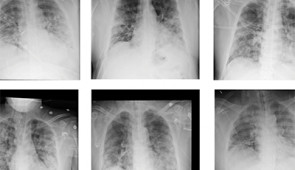

X-ray samples

Sample of most representative images from different classes of DeepCOVID-XR predictions relative to the reference standard. Credit: Northwestern University

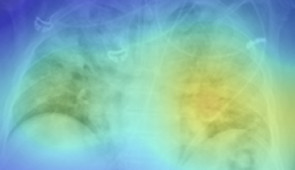

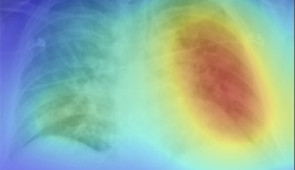

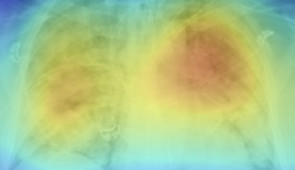

Heat maps

Grad-CAM heat maps of feature importance for positive COVID-19 prediction. Credit: Northwestern University

Interview the Experts

Ramsey Wehbe

First author

Cardiologist and postdoctoral fellow in A.I.